I like to solve hard problems, which is good since that's my job. This involves significant analysis, and complex problem-solving time and time again.

I like to solve hard problems, which is good since that's my job. This involves significant analysis, and complex problem-solving time and time again.I have been sharing some of the details of the complex problem solving required to produce features in Painter, but for once I will discuss a tiny, almost lost bit of work I did at my current job. This involves a problem I have been seeking to solve for at least ten years: the lofting problem.

You might be asking yourself how I can talk about it, since it involves my current work. The answer is that this work is now in the public domain, since the process I am about to describe is detailed quite explicitly in US Patents 7,227,551 and 7,460,129. It is also not particularly secret or unavailable since it can be had by using Core Image, part of Mac OS X, as I will indicate.

The Lofting Problem

|

| A Text Mask to be Shaded |

While working on something quite different, another employee, Kok Chen, and I came across something truly amazing.

We solved the lofting problem.

|

| The Wrong Answer |

Here is the result. To do this, I used Apply Surface Texture using Image Luminance, and cranked up the Softness slider.

But all this can do is soften the edge. It can't make the softness sharper near the edge, which is required to get a really classy rendering.

|

| The Right Answer! |

Think of a piece of rubber or flexible cloth tacked down to a hard surface at all points along its edge, and then puffed full of air from underneath: lofted.

I think you get the point. The difference is like night and day! Before I implemented this in Core Image, this was not an easy effect to achieve, and probably couldn't even be done without huge amounts of airbrushing.

In Core Image, you might use the Color Invert, Mask To Alpha, Height Field From Mask, and Shaded Material effects to achieve this. And you might have to use a shiny ball rendering as the environment map, like I did. By the way, you can mock up most of this in Core Image Fun House, an application that lives in the Developer directory. Core Image is a great tool to build an imaging application. Just look at Pixelmator!

But How Was This Solved?

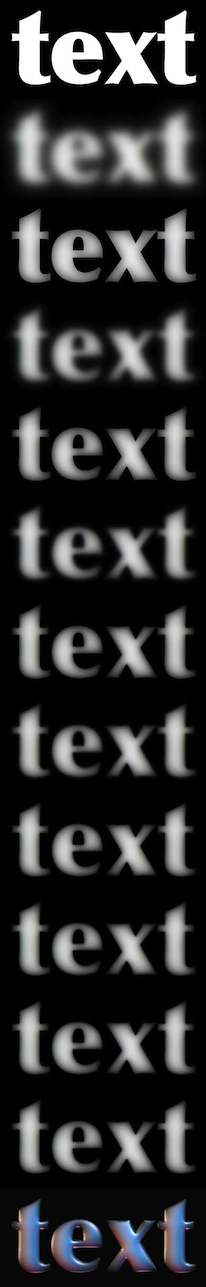

Exactly! This was a very interesting problem in constraints. You see, the area outside the text is constrained to be at a height of zero. The area inside the text is initialized to be a height of 1. This is the first image in sequence.

Exactly! This was a very interesting problem in constraints. You see, the area outside the text is constrained to be at a height of zero. The area inside the text is initialized to be a height of 1. This is the first image in sequence.Now blur the text with an 8-pixel radius. It creates softness everywhere, and puts some of the softness outside the text area, because blur actually is something that causes shades to bleed a bit. This is the second image in sequence.

Then you clamp the part outside the text to be zero height again. This recreates a hard edge, and creates the third image in sequence. I have recreated this process in Painter. The clamping was done by pasting in the original mask, choosing a compositing layer method of darken, and dropping the layer, collapsing it back onto the canvas.

Next I take that same image and blur it by a 4-pixel radius. This produces the fourth image in sequence.

I clamp it back to the limits of the original text by pasting, choosing a darken layer method, and dropping, producing the fifth image in sequence.

Notice after the clamp step, the edge remains hard but the inside is becoming more accommodating to the edge, yet remains smooth in its interior.

With the next images in the sequence, I blur with a 2-pixel radius, clamp to the edge of the original mask, then blur with a one pixel radius and clamp to the hard edge of the original mask again to get the final lofted mask.

The last image in sequence is the shaded result, using Apply Surface Texture in Painter.

I should confess that these images are done at a larger scale (3X) and then down sampled for your viewing pleasure. Also, Painter only keeps 8 bits per channel, so the result was a bit coarse, and had to be cleaned up using the softness slider in the Apply Surface Texture effect.

In Core Image, we use more than 8 bits per component and thus have plenty of headroom to create this cool effect at much higher resolution and with fewer artifacts.

It becomes clear that the last several frames of this sequence look practically the same. This is because the blurs are smaller and the effect of the clamping against the edge of the original mask is less prominent. Yet these last steps become very important when the result is shaded as a height field, because the derivatives matter.

Smart People and Hard Problems

It should seem obvious, but it really does take smart people to solve hard problems. And a lot of work. And trial and error. So I'm going to tender a bit of worship to the real brains that helped shepherd me on my way through life and career. To whom I owe so much. And I will explain their influences on me.

The first was Paul Gootherts. Paul is an extra smart guy, and, during high school, Paul taught me the basics of computer programming. He, in turn, got it from his dad, Jerome Gootherts. A competent programmer at 16 (or earlier, I suspect), I owe my livelihood to Paul and his wisdom; he also conveyed to me something that (I think his uncle told him): if you are good at one thing, you can find work. But if you can be good at two things, and apply what you know and cross-pollenate the two fields, then you can be a real success. I applied this to Painter, and almost all of my life. And perhaps someday I'll apply it to music as well. Paul helped me with problems in computation and number theory, and his superb competence led me to develop some of my first algorithms in collaboration with him.

The second was Derrick Lehmer, professor emeritus at UC Berkeley. He taught me that, though education is powerful, knowledge and how you apply it is even more powerful. And it's what you do with your life that is going to make the difference. This has guided me in far-reaching ways I can only begin to guess at. Professor Lehmer ("Don't call me Doctor; that's just an honorary degree") helped me with understanding continued fractions and their application to number theory and showed me that prime numbers and factorization are likely to remain the holy grail of mathematical pursuits. Oh, and his wife Emma (Trotskaia) Lehmer, who was also present, provided many hours of interesting conversation on our common subject: factoring repunits (numbers consisting of only ones). I must credit a few of my more clever number theoretic ideas to her.

Other smart people have also provided a huge amount of inspiration. Tom Hedges had a sharp eye and an insightful mind. He taught me that no problem was beyond solving and he showed me that persistence and a quick mind can help you get to the root of a problem. Even when nobody else can solve it. Tom helped me with Painter and validated so much that I did. Without Tom, it would have been hard to ship Painter at all!

I have probably never met as smart a person as Ben Weiss. When the merger between MetaTools and Fractal Design took place, I became aware of his talents and saw first hand how smart this guy is. He can think of things that I might never think of. He confirmed to me that the power of mathematical analysis is central to solving some of the really knotty problems in computer graphics. And he proceeded to famously solve the problem of making the median filter actually useful to computer graphics: a real breakthrough. I've seen Ben at work since Metacreations, and he is still just as clever.

While Bob Lansdon introduced me to Fourier-domain representation and convolutions, it was really Kok Chen that helped me understand intuitively the truly useful nature of frequency-domain computations. He introduced me to deconvolution and also showed me that there were whole areas of mathematics that I still needed to tap to solve even harder problems. Kok retired a few years back and I sincerely hope he is doing well up north! Kok basically put up with me when I came to Apple and helped me time and time again to solve the early problems that I was constantly being fed by Peter Graffagnino. When it came time to work with the GPU and later with demosaicing, Kok Chen consistently proved to be indispensable in his guidance.

There are plenty other people who I will always refer to as smarter than myself in various areas, but they will have to go unnamed until future posts.